Exascale Performance of the Simple Cloud Resolving E3SM Atmosphere Model

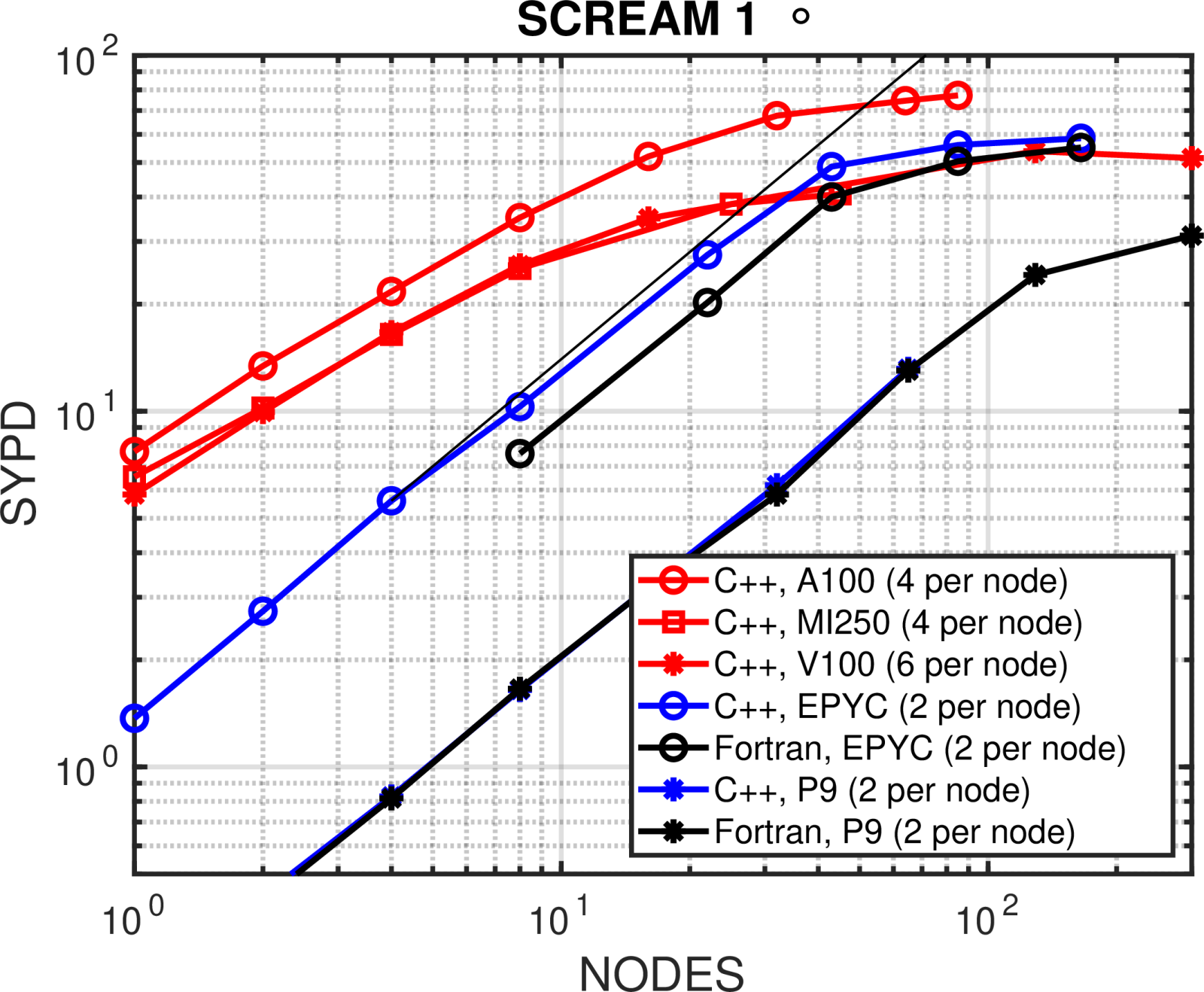

Strong scaling of SCREAMv1 (C++; red for GPUs, blue for CPUs) and a similarly configured EAM (Fortran; black lines with symbols, CPUs only) at 110 km (1 degree) resolution, showing throughput in simulated years per day (SYPD) as a function of compute node count. The thin black line denotes perfect scaling.

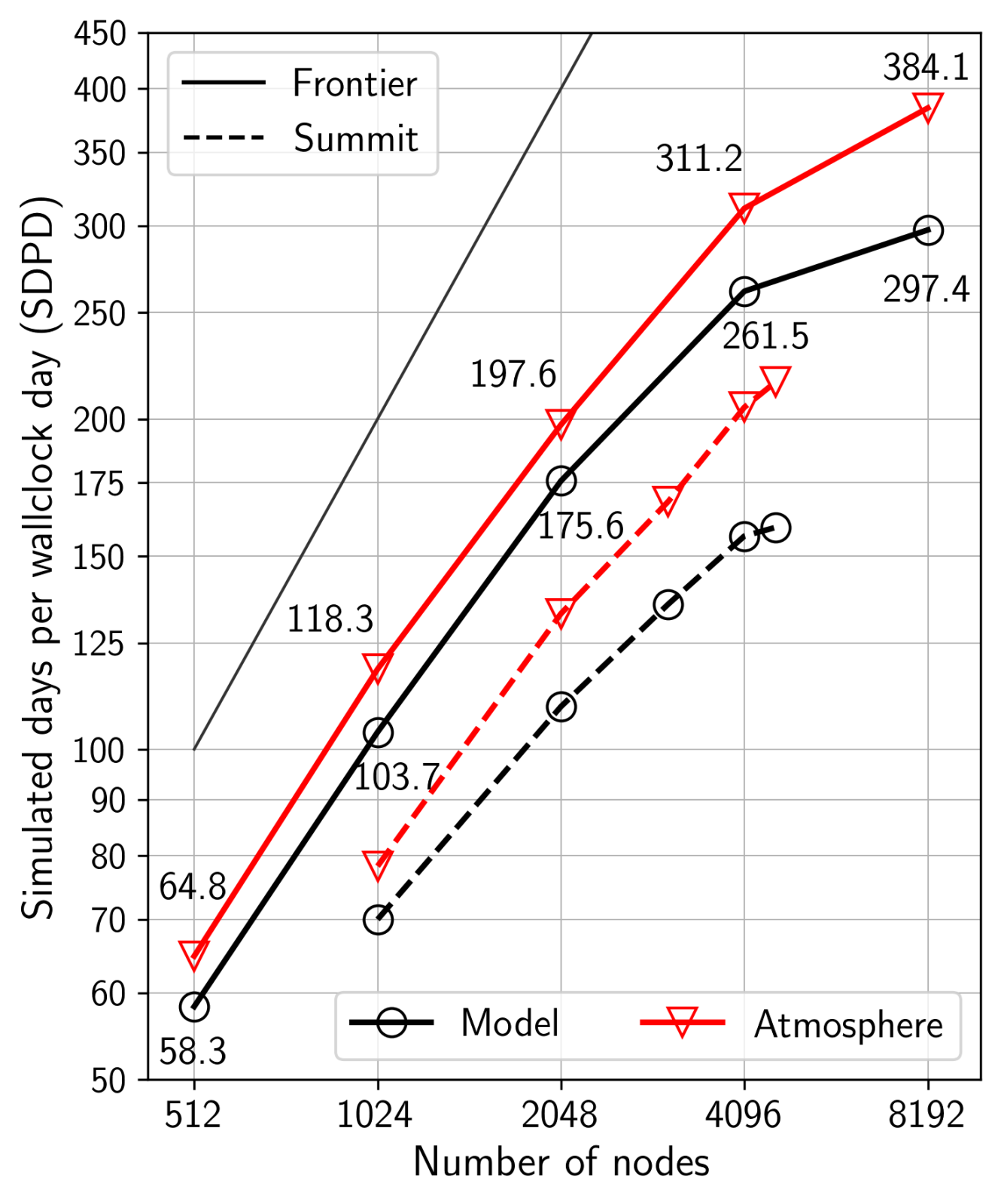

Early access to the first U.S. Exascale computer, OLCF Frontier, coincided with the completion of the Simple Cloud Resolving E3SM Atmosphere Model Version 1 (SCREAMv1). Frontier is not yet open for general access, but through DOE’s Exascale Computing Project (ECP), E3SM developers had a 10-day window to test the performance of the new SCREAMv1 model running at a global 3.25 km resolution with 128 vertical layers and 16 prognostic variables. They obtained record-setting performance, with the atmosphere component (Figure 1, solid red curve) running at more than 1 simulated year per day (SYPD). This was achieved on 8192 Frontier nodes, each containing 4 AMD MI250 GPUs. The full model (Figure 1, solid black curve), which includes active land and ice components with prescribed sea ice extent and sea surface temperatures, runs 29% slower. These benchmarks are for the exact configuration of the model used for the DYAMOND protocol simulations described below.
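For readers unfamiliar with the metric, SYPD is the number of model years simulated per wall-clock day. The sketch below is illustrative only: the function names are hypothetical, the 365-day calendar is an assumption, and the 1.0 SYPD input is a placeholder for the reported "greater than 1" rather than a measured value. Because the text does not say whether "29% slower" refers to throughput or to run time, both readings are shown.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365  # assumption: a 365-day (no-leap) model calendar


def sypd(simulated_days: float, wallclock_seconds: float) -> float:
    """Simulated years per wall-clock day."""
    simulated_years = simulated_days / DAYS_PER_YEAR
    wallclock_days = wallclock_seconds / SECONDS_PER_DAY
    return simulated_years / wallclock_days


def slower_by_throughput(base_sypd: float, fraction: float) -> float:
    """Reading 1: throughput drops by `fraction` (e.g. 0.29)."""
    return base_sypd * (1.0 - fraction)


def slower_by_runtime(base_sypd: float, fraction: float) -> float:
    """Reading 2: wall-clock time per simulated year grows by `fraction`."""
    return base_sypd / (1.0 + fraction)


if __name__ == "__main__":
    atmosphere_sypd = 1.0  # placeholder, not a measured value
    print("throughput reading:", slower_by_throughput(atmosphere_sypd, 0.29))
    print("run-time reading:  ", slower_by_runtime(atmosphere_sypd, 0.29))
```

Under either reading, the full model's throughput at this placeholder value comes out somewhat below 1 SYPD; the two interpretations differ by only a few hundredths.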

Cloud resolving models explicitly resolve large convective circulations, removing a key source of uncertainty that is present in traditional climate models. Running at this resolution globally requires tremendous computing resources in order to achieve the integration rates needed for multi-decadal climate simulations. Achieving 1 SYPD on an Exascale machine represents a breakthrough capability that has been a longstanding E3SM goal.

The E3SM Atmosphere Model in C++ (EAMxx): This performance was made possible by years of work developing the EAMxx code base used by SCREAMv1. EAMxx was written from scratch in C++ and uses the Kokkos performance portability programming library. Previous work has shown that this approach works well on NVIDIA GPUs in the OLCF pre-exascale Summit system (Introduction to EAMxx and its Superior GPU Performance), and additional exploratory work on testbed machines bolstered preparations for exascale architectures. The success of this performance-portability strategy was further validated by the team’s ability to quickly get the model up and running on Frontier (which uses new AMD GPUs) during their short access window. Frontier’s new AMD GPUs, improved network interconnect, and increased node count (as compared to Summit) nearly doubled SCREAMv1’s peak performance (in Figure 1, solid lines show Frontier performance and dashed lines show Summit performance).

SCREAMv1’s use of C++/Kokkos is one of its most distinctive features within the atmospheric modeling community. The transition from Fortran to C++ involved first porting E3SM’s nonhydrostatic spectral element dynamical core, then porting several atmospheric physics packages, integrating the C++ port of the RRTMGP radiation package (developed by the DOE ECP project with the YAKL performance-portability layer), integrating E3SM’s semi-Lagrangian tracer transport scheme (Islet: Interpolation Semi-Lagrangian Element-Based Transport), and finally creating the atmospheric driver that couples all these components together. This C++/Kokkos approach has proven robust and achieves true performance portability across a wide variety of architectures, including Intel, AMD, and IBM CPUs and NVIDIA and AMD GPUs.

To further evaluate SCREAMv1’s performance portability, the team configured a lower-resolution (110 km) version of the full model. This version, as well as the original CPU-only E3SM Atmosphere Model (EAM), was then run on a wide variety of computer architectures. Strong-scaling results from this 110 km version of the model are shown in Figure 2, which presents results from the OLCF Summit system (with P9 CPUs and V100 GPUs), NERSC’s Perlmutter (with AMD EPYC CPUs and NVIDIA A100 GPUs), and an OLCF testbed containing nodes identical to the Frontier system (MI250 GPUs). Comparing the EAM and SCREAMv1 results on CPUs (blue and black curves in the figure, labeled “C++” for SCREAMv1 and “Fortran” for EAM, and “P9” or “EPYC” for Summit and Perlmutter CPUs, respectively), the data show that SCREAMv1 is as fast as or faster than the older EAM Fortran code base, establishing that it is a competitive replacement for EAM on CPUs. SCREAMv1 performs best when taking advantage of GPUs (red curves in the figure).

There is a large range of node counts over which GPU nodes significantly outperform CPU-only nodes, and SCREAMv1 obtains good performance across several different GPUs. At this relatively coarse 110 km resolution, the number of nodes can be increased well past the limits of strong scaling, to the point where adding nodes yields diminishing returns.
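One common way to quantify how far a run has drifted from perfect strong scaling is parallel efficiency: the measured speedup divided by the ideal speedup for the same increase in node count. The following is a minimal sketch, not the analysis used for the figure; the function name is hypothetical and the throughput numbers are invented purely for illustration.

```python
def strong_scaling_efficiency(nodes, throughput):
    """Parallel efficiency at each node count, relative to the first entry.

    efficiency = (throughput / base_throughput) / (nodes / base_nodes)
    A value of 1.0 corresponds to perfect strong scaling.
    """
    if not nodes or len(nodes) != len(throughput):
        raise ValueError("nodes and throughput must be non-empty and equal length")
    base_nodes, base_tp = nodes[0], throughput[0]
    return [(tp / base_tp) / (n / base_nodes) for n, tp in zip(nodes, throughput)]


if __name__ == "__main__":
    # Invented sample data (NOT taken from the figure): SYPD at each node count.
    node_counts = [1, 2, 4, 8, 16]
    sample_sypd = [10.0, 19.0, 34.0, 52.0, 60.0]
    for n, eff in zip(node_counts, strong_scaling_efficiency(node_counts, sample_sypd)):
        print(f"{n:3d} nodes: efficiency {eff:.3f}")
```

With data shaped like this, efficiency falls steadily as nodes are added, which is the numerical counterpart of a throughput curve bending away from the perfect-scaling line.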

This is shown by the flattening of the curves in Figure 2 as the node count is increased. At this limit of strong scaling, there is very little work per node. In this regime, GPU nodes remain competitive with CPU nodes but lose much of their speedup and become less efficient once power considerations are taken into account.
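To get a feel for how little work per node remains at high node counts, one can count spectral elements on a cubed-sphere grid, which has six faces of ne × ne elements each. This rough sketch assumes that the 110 km grid corresponds to ne = 30 and the 3.25 km grid to ne = 1024; neither value is stated in this article, and the function names and the 256-node example are hypothetical.

```python
def cubed_sphere_elements(ne: int) -> int:
    """Number of elements on a cubed sphere with ne x ne elements per face."""
    return 6 * ne * ne


def elements_per_node(ne: int, nodes: int) -> float:
    """Average number of elements assigned to each node."""
    return cubed_sphere_elements(ne) / nodes


if __name__ == "__main__":
    # Assumed grid sizes (not given in the article): ne=30 ~ 110 km, ne=1024 ~ 3.25 km.
    print("110 km, 256 nodes :", elements_per_node(30, 256))
    print("3.25 km, 8192 nodes:", elements_per_node(1024, 8192))
```

Under these assumptions the coarse grid has only a few thousand elements in total, so spreading it over hundreds of nodes leaves each node with very few elements, while the 3.25 km grid still has hundreds of elements per node at 8192 nodes.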